How LLMs Solve the Sloppy Input Problem

LLMs excel at making sense of messy, unstructured input. That shifts the burden of precision from people to systems. This capability unlocks massive opportunities in business.

LLMs solve a problem we could never solve before: making sense of sloppy input. Most of life is unstructured, noisy, and out of order. LLMs figure out what's going on, fill in the gaps, and keep the work moving. This points to an important trend: the burden of precision is shifting from people to systems, which opens many new opportunities for those who know how to capitalize on it.

Excel gives all of us headaches. One wrong data type or one mixed unit, and the whole sheet breaks. You dig through cells, fix formats, and try again. With an LLM in the loop, the model spots the mismatch, cleans the value, and fixes the formula. You stay in flow and get a lot more done.

LLMs are a lifeline for robotics. The real world is very messy, and there is no way to write rule-based code that handles every situation a robot will face. Because they cope with sloppy input, LLMs can extract what matters and ignore the noise. For example, a robot running a multimodal LLM can read a customer's facial expression in real time and decide what to do next, without a dedicated emotion-detection model.

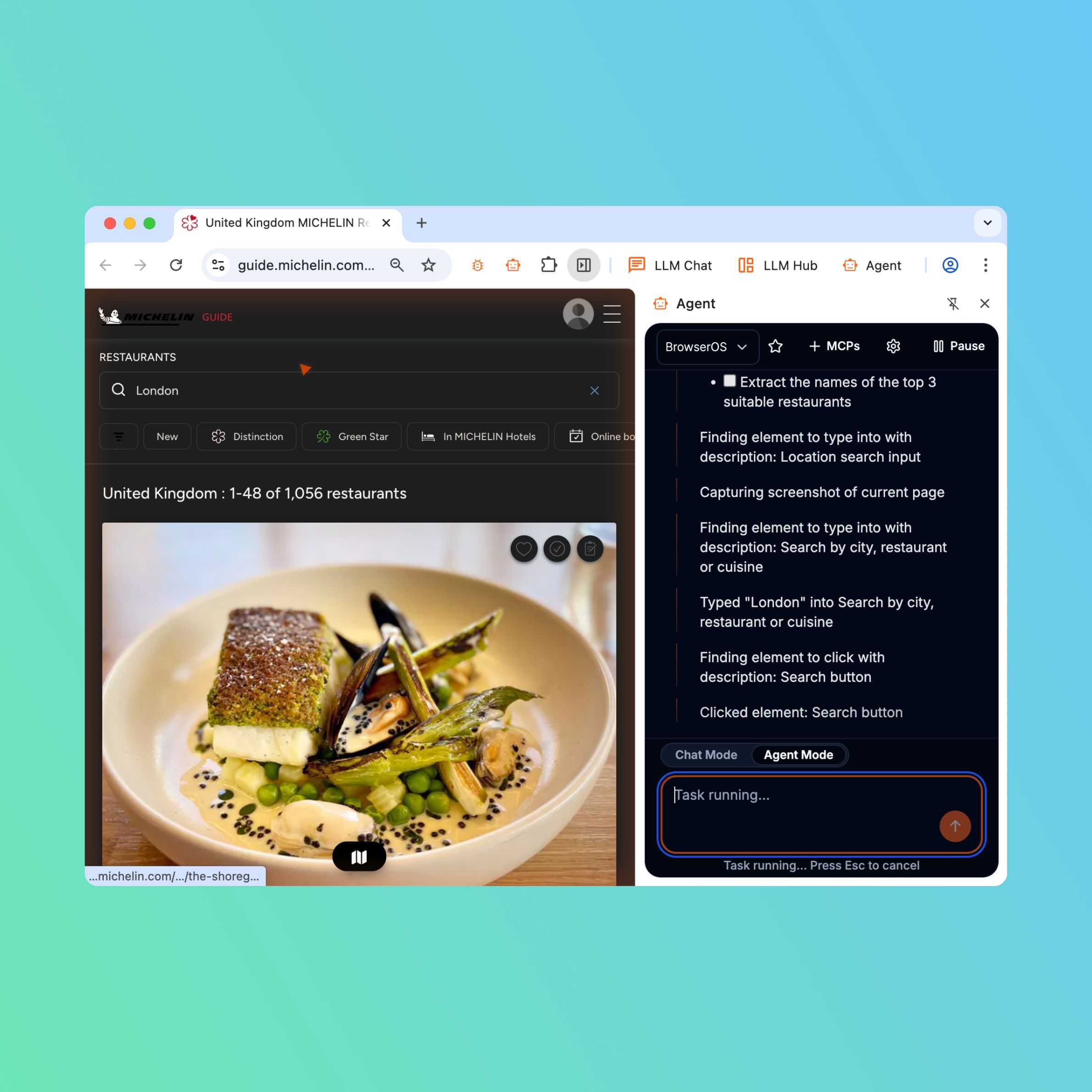

Healthcare, office work, and many other domains will get a huge boost. Patients can describe their symptoms vaguely, and the LLM can still work out the underlying issue. An analyst can say “Pull a quick view of Q3 churn across countries,” or a stakeholder can ask “Is something off with these numbers?”, and the LLM can turn either request into code and run it.

Another important benefit is that LLMs can make your system more resilient. When something fails, an LLM can treat the error as just more messy input: it diagnoses the problem, tries fixes, retries with better settings, and escalates only when it gets stuck. Teams can teach it like an intern by showing it a few diverse examples; the LLM picks up the pattern and handles edge cases, sharply reducing how often a human has to step in.
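To make the diagnose, retry, escalate loop concrete, here is a minimal Python sketch. It assumes the LLM is reachable through some function that takes a prompt string and returns the reply text. The names `ask_llm`, `self_heal`, `build_prompt`, and `EscalationNeeded`, the JSON "fix" format, and the few-shot examples are all hypothetical illustrations, not any particular library's API. The few-shot examples play the role of the "intern training" described above, and the demo uses a fake LLM so the sketch runs offline.

```python
import json
from typing import Callable, Dict, List, Tuple

# Hypothetical few-shot examples: (error message, fix). Diverse cases teach the
# model the pattern, including when to give up and escalate.
FEW_SHOT_EXAMPLES: List[Tuple[str, Dict]] = [
    ("TimeoutError: request took longer than 5s", {"action": "retry", "timeout_s": 15}),
    ("ValueError: could not parse date '03/14/24'", {"action": "retry", "date_format": "%m/%d/%y"}),
    ("PermissionError: access denied to production database", {"action": "escalate"}),
]


class EscalationNeeded(Exception):
    """Raised when the loop gives up and a human should step in."""


def build_prompt(error: str, examples: List[Tuple[str, Dict]]) -> str:
    """Build a few-shot prompt: known error -> fix pairs, then the new error."""
    parts = ["Diagnose the error and reply with a JSON fix."]
    for err, fix in examples:
        parts.append(f"Error: {err}\nFix: {json.dumps(fix)}")
    parts.append(f"Error: {error}\nFix:")
    return "\n\n".join(parts)


def self_heal(
    task: Callable[[Dict], object],
    settings: Dict,
    ask_llm: Callable[[str], str],  # hypothetical: sends a prompt to an LLM, returns its reply text
    max_attempts: int = 3,
) -> object:
    """Run task(settings); on failure, ask the LLM for a fix and retry, else escalate."""
    last_error = ""
    for _ in range(max_attempts):
        try:
            return task(settings)
        except Exception as exc:  # treat any failure as messy input for the LLM
            last_error = f"{type(exc).__name__}: {exc}"
            try:
                fix = json.loads(ask_llm(build_prompt(last_error, FEW_SHOT_EXAMPLES)))
            except json.JSONDecodeError:
                break  # unusable diagnosis: hand off to a human
            if not isinstance(fix, dict) or fix.get("action") != "retry":
                break  # the model chose to escalate (or answered off-format)
            # Retry with better settings: merge the proposed overrides.
            overrides = {k: v for k, v in fix.items() if k != "action"}
            settings = {**settings, **overrides}
    raise EscalationNeeded(last_error)


# Demo with a fake LLM so the sketch runs without any API key.
def fetch_report(settings: Dict) -> str:
    if settings.get("timeout_s", 5) < 10:
        raise TimeoutError("request took longer than 5s")
    return "report ok"


def fake_llm(prompt: str) -> str:
    return '{"action": "retry", "timeout_s": 15}'


if __name__ == "__main__":
    print(self_heal(fetch_report, {"timeout_s": 5}, fake_llm))  # → report ok
```

Keeping the model's answer in a small JSON shape and treating only `retry` as an automatic action is one way to keep the loop predictable: anything the model declines to fix, or any reply that fails to parse, falls through to a human.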

As the burden of precision shifts from people to systems, new products will emerge: chat-driven analytics, error-tolerant robots, self-healing software, natural-language interfaces for every tool, and many more. Enterprises that catch this wave will ship faster, cut handoffs, and raise quality. These productivity gains will compound into an enormous edge. Builders who embrace disorder and let LLMs impose order will inherit the next advantage.