
Periodic Labs: A star is born

The new startup is destined to be a star, but can it live up to these great expectations?

On 30 Sep 2025, Liam Fedus and Ekin Dogus Cubuk announced the launch of Periodic Labs on X. Unluckily for them, OpenAI released Sora 2 on the same day, which took away some of the spotlight the launch deserved.

The startup has already assembled a crew of high-profile researchers in physics, chemistry, and machine learning from top-tier labs such as DeepMind, OpenAI, and Meta. The name reflects their ambitious goal: “Periods of human history are named after materials: Copper Age, Bronze Age, Iron Age, Silicon Age, and Periodic Labs aims to discover the materials that define the next age.”

The strategy

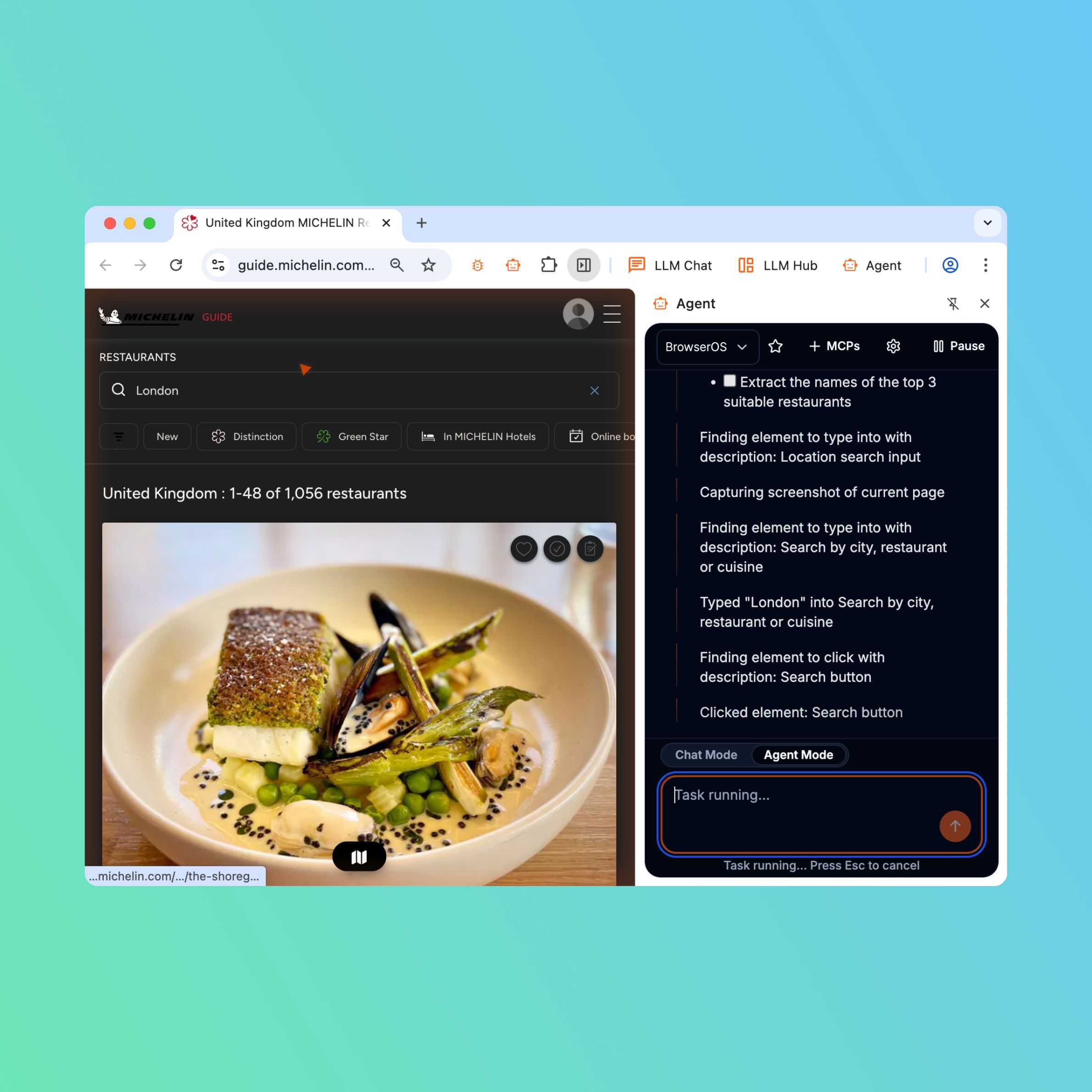

They focus on making new discoveries in physics and chemistry. To do so, they aim to create AI scientists: LLM-powered robots capable of carrying out multi-turn tasks in laboratories, from thinking and designing experiments to optimising for results and physically moving robot arms in the lab to perform them.

That’s a good strategy, because of two critical properties of LLMs:

Frontier LLMs have already consumed nearly all of the text available. Reusing this text to train them will yield diminishing returns.

LLMs are trained to predict the most probable next token, so they tend to follow the most popular paths seen in their training data.

Together, these two properties make it difficult to use LLMs for research in fundamental fields like math, as the models will most likely follow the paths that mathematicians have already discovered.
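To make the "most popular path" point concrete, here is a minimal toy sketch in Python. Everything in it is an assumption for illustration: the three continuations, their counts, and the helper names are hypothetical and do not come from any real model. It only shows that picking the most probable continuation always returns the well-trodden option, and that even random sampling rarely reaches the unexplored one.

```python
import math
import random

# Hypothetical toy data: how often each continuation appeared in "training text".
CONTINUATION_COUNTS = {
    "known proof technique": 90,
    "standard textbook approach": 9,
    "unexplored idea": 1,
}

def next_token_probs(counts, temperature=1.0):
    """Softmax over log-counts; a lower temperature sharpens toward the mode."""
    logits = {tok: math.log(c) / temperature for tok, c in counts.items()}
    max_logit = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(l - max_logit) for tok, l in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def greedy_pick(counts):
    """Always choose the single most probable continuation."""
    probs = next_token_probs(counts)
    return max(probs, key=probs.get)

def sample_pick(counts, rng, temperature=1.0):
    """Draw one continuation at random according to its probability."""
    probs = next_token_probs(counts, temperature)
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

def rare_fraction(counts, n=10_000, temperature=1.0, seed=0):
    """Fraction of n sampled picks that land on the rare continuation."""
    rng = random.Random(seed)
    picks = [sample_pick(counts, rng, temperature) for _ in range(n)]
    return picks.count("unexplored idea") / n

if __name__ == "__main__":
    print(greedy_pick(CONTINUATION_COUNTS))
    print(rare_fraction(CONTINUATION_COUNTS))
```

Real LLMs work over far larger vocabularies and contexts, so this is only an analogy: new experimental data changes the counts themselves, which is something no decoding trick can do.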

In physics and chemistry, on the other hand, hypotheses and theories are plentiful, but they need experiments to validate them. If Periodic Labs uses robots to perform these experiments at massive scale, it can gather a lot of new data that LLMs have never seen. Combining the old knowledge with the new data should then make research discoveries substantially more likely. Indeed, throughout human history, most of our inventions have come from persistent tinkering rather than isolated acts of genius. More experiments and more trial and error will likely lead to new findings.

Periodic Labs plans to build autonomous laboratories capable of performing physical experiments at unprecedented speed to make new materials. They are confident that this will remove the main bottleneck of traditional labs: slow experiments. The path is clear, and they already have $300M in funding to realise that vision.

The challenges

However, running a research-leaning startup is hard, and so is balancing research against building products that bring in revenue. A cautionary tale is Element AI, co-founded in 2016 by Yoshua Bengio, a Turing Award laureate and one of the three "godfathers" of deep learning. It carried great expectations too, but it failed to turn its research into a sustainable business and was sold to ServiceNow in 2020.

Private investors can be patient for three years, maybe five, but it is hard for them to wait ten years without seeing product-market fit and a return on investment. To keep the startup afloat, Periodic Labs needs to find a way to monetise within a couple of years.

With the ambition of making high-temperature superconductors, there will no doubt be a long list of customers eager to write them big checks. The only question is whether their AI can do novel research and come up with new inventions. That will become clear in a couple of years, maybe together with the answer to whether we reach AGI or not.